User home directory storage#

All users on all the hubs get a home directory with persistent storage.

This is made available through cloud-specific filestores.

Managed NFS Server setup#

Terraform is set up to provision a managed NFS server using the following cloud-specific implementations:

GCP: Google Filestore

Azure: Azure Files

AWS: Elastic File System

NFS Client setup#

Each hub needs the components described in the following subsections.

Hub directory#

A directory is created under the path defined by the nfs.pv.baseShareName cluster config.

Usually this is /homes, for hubs that use the managed NFS provider for the cloud platform.

The base share itself is created using the infrastructure described in the Terraform section.

It is the base directory (nfs.pv.baseShareName) under which each hub has its own directory.

The hub directory is created by a job, set up for each deployment via helm hooks, that mounts nfs.pv.baseShareName and makes sure the directory for the hub is present on the NFS server with appropriate permissions.

Note

The NFS share creator job will be created pre-deploy, run, and cleaned up before deployment proceeds. Ideally, this would only happen once per hub setup - but we don’t have a clear way to do that yet.
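As an illustration of the pattern described above, here is a minimal sketch of a share creator job run as a helm hook. It is not the chart's actual template: the job name, image, NFS server address, hub name, and the uid/gid in the `chown` are all assumptions made for this example.

```yaml
# Sketch only: names, image, server address and uid/gid are hypothetical.
apiVersion: batch/v1
kind: Job
metadata:
  name: nfs-share-creator
  annotations:
    # Run before the release's resources are installed or upgraded
    "helm.sh/hook": pre-install,pre-upgrade
    # Remove any previous hook job, and clean up once this one succeeds
    "helm.sh/hook-delete-policy": before-hook-creation,hook-succeeded
spec:
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: create-hub-directory
          image: busybox
          command:
            - sh
            - -c
            # Create the hub directory under the base share and hand it to
            # the (assumed) uid/gid that user pods run as
            - mkdir -p /export/example-hub && chown 1000:1000 /export/example-hub
          volumeMounts:
            - name: base-share
              mountPath: /export
      volumes:
        - name: base-share
          nfs:
            server: nfs.example.internal  # hypothetical NFS server address
            path: /homes                  # assumed value of nfs.pv.baseShareName
```

Because the hook runs on every install and upgrade, the command is written to be idempotent: `mkdir -p` succeeds whether or not the directory already exists.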

Hub user mount#

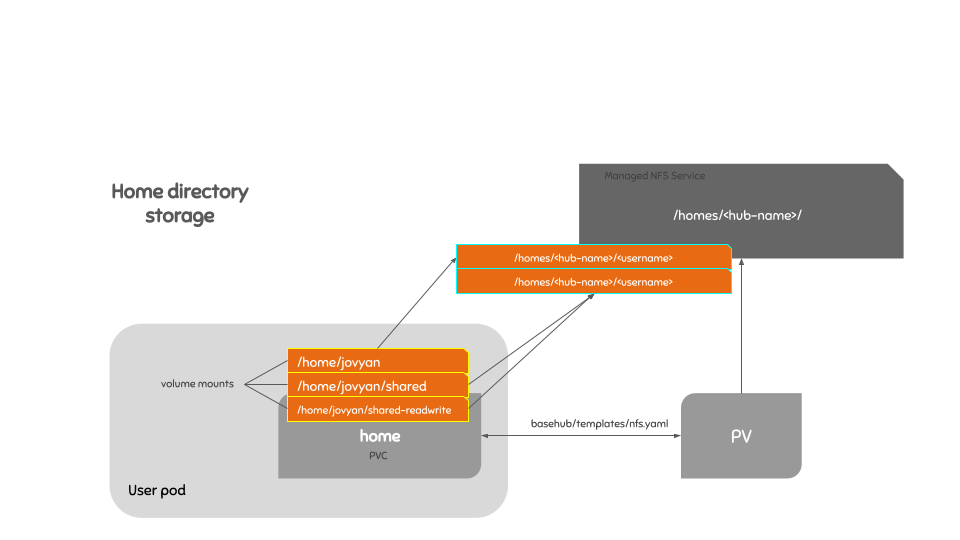

For each hub, a PersistentVolumeClaim (PVC) and a PersistentVolume (PV) are created.

The PV is the Kubernetes volume that refers to the actual storage on the NFS server.

The volume points to the hub directory created for the hub and user at <hub-directory-path>/<hub-name>/<username> (this name is dynamically determined as a combination of nfs.pv.baseShareName and the current release name).
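A PV and PVC pair backed by NFS could look roughly like the sketch below. The resource names, server address, hub name, and storage sizes are assumptions for illustration, and the sketch assumes the PV path stops at the hub directory, with the per-user part selected later through a subPath.

```yaml
# Sketch only: resource names, server address and sizes are hypothetical.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: example-hub-home-nfs
spec:
  capacity:
    storage: 1Mi  # required by the API; not a quota on the NFS share
  accessModes:
    - ReadWriteMany  # many user pods mount the same share
  nfs:
    server: nfs.example.internal  # hypothetical NFS server address
    path: /homes/example-hub      # <hub-directory-path>/<hub-name>
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: home-nfs
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""  # disable dynamic provisioning for this claim
  volumeName: example-hub-home-nfs  # bind to the PV defined above
  resources:
    requests:
      storage: 1Mi
```

Setting `volumeName` together with an empty `storageClassName` binds the claim to the pre-created PV rather than asking a provisioner for a new volume.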

Z2jh then mounts the PVC on each user pod as a volume named home.

Parts of the home volume are mounted in different places for the users, as detailed below.

User home directories#

Z2jh will mount into /home/jovyan (the mount path) the contents of the path <hub-directory-path>/<hub-name>/<username> on the NFS storage server.

Note that <username> is specified as a subPath, that is, the subdirectory within the volume to mount at that location.
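In Z2jh terms, this kind of mount can be expressed with static storage settings. The values below are a sketch rather than the hub's actual configuration, and the PVC name `home-nfs` is an assumption.

```yaml
# Sketch only: the PVC name is hypothetical.
jupyterhub:
  singleuser:
    storage:
      type: static  # reuse one existing PVC for all users
      static:
        pvcName: home-nfs
        # Expanded per user, so each pod only sees its own subdirectory
        subPath: "{username}"
      homeMountPath: /home/jovyan
```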

(Optional) The allusers directory#

This directory can be mounted just for admins, and shows the contents of <hub-directory-path>/<hub-name>/.

The volumeMount can be made read-only by setting the following property on it: readOnly: true.

If it is not specified, then by default, it is NOT readOnly, so admins can write to it.

Its purpose is to give hub admins access to all users' home directories, to read and/or modify them.

    jupyterhub:
      custom:
        singleuserAdmin:
          extraVolumeMounts:
            - name: home
              mountPath: /home/jovyan/allusers
              # Uncomment the line below to make the directory readonly for admins
              # readOnly: true
            - name: home
              mountPath: /home/rstudio/allusers
              # Uncomment the line below to make the directory readonly for admins
              # readOnly: true
            # mounts below are copied from basehub's values that we override by
            # specifying extraVolumeMounts (lists get overridden when helm values
            # are combined)
            - name: home
              mountPath: /home/jovyan/shared-readwrite
              subPath: _shared
            - name: home
              mountPath: /home/rstudio/shared-readwrite
              subPath: _shared